A long journey: Dockerizing a NestJS app and debugging it efficiently.

This will be a "step-by-step" guide with a little explanation along the way, so you don't just copy-paste 😃.

Installation 🔨

NestJS - a NodeJS framework for building server-side APIs, designed primarily for TypeScript (but there's also a JS version).

Install the NestJS command-line interface, a convenient way to bootstrap the project:

```shell
npm install -g @nestjs/cli # OR yarn global add @nestjs/cli
```

Docker and Docker-Compose - Dockerizing means packaging the app into a standardized unit for development. It's similar to a virtual machine, but much lighter and more efficient (you can read more in the Docker docs).

Docker is really awesome: I bring up an entire multi-microservice deployment in a couple of seconds using `docker-compose`, a CLI wrapper around the Docker engine.

IDE - I will be using Visual Studio Code, referenced mostly in the Debugging part.

Project setup ⚒

Now with all of these awesome tools in our hands let's set up everything 💪!

- Starting with NestJS -

```shell
nest --help
```

This lists the available commands, so we can create a new app:

```shell
nest new <the name of your app>
```

Nest will bootstrap the project, and you will be prompted to select your favorite package manager.

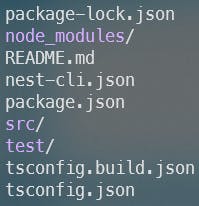

Your project folder will contain the following files/folders

Let's move on to the `Dockerfile` and `.dockerignore` files:

```shell
touch Dockerfile .dockerignore
```

`Dockerfile` - we describe a set of instructions that will eventually build our Docker image.

`.dockerignore` - we list file/folder paths to exclude from the build context, so they never get copied into the image.

Writing our `Dockerfile` and `.dockerignore`:

```dockerfile
# Dockerfile
FROM node:lts
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
CMD ["npm","run","start"]
```

Breaking things up -

`FROM node:lts` - `FROM` tells the build which image we are basing/extending our image on. I chose `node:lts`, an official Node.js image. The reference format is `IMAGE:TAG`, where `TAG` usually stands for a version, typically aligned with SemVer (Semantic Versioning); `lts` is a moving tag that tracks the current Long Term Support release.

So `node:lts` is a prebuilt Node.js Docker image with the npm and Yarn CLIs installed (you can view its Dockerfile in the official docker-node repository). If I were creating a Java project, I would probably use an OpenJDK image to compile it.
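To make the `IMAGE:TAG` convention concrete, here is a small TypeScript sketch that splits a reference such as `node:lts` into its name and tag. `parseImageRef` is a hypothetical helper, not part of any Docker library; it assumes Docker's rule that an omitted tag means `latest`, and it deliberately ignores digests (`name@sha256:...`).

```typescript
// Hypothetical helper: split a Docker image reference into name and tag.
// Simplified sketch: digests (name@sha256:...) are not handled.
interface ImageRef {
  name: string;
  tag: string;
}

function parseImageRef(ref: string): ImageRef {
  // A registry host may contain a port (e.g. localhost:5000/app),
  // so only a colon AFTER the last "/" separates the tag.
  const lastSlash = ref.lastIndexOf("/");
  const lastColon = ref.lastIndexOf(":");
  if (lastColon > lastSlash) {
    return { name: ref.slice(0, lastColon), tag: ref.slice(lastColon + 1) };
  }
  // No explicit tag: Docker falls back to "latest".
  return { name: ref, tag: "latest" };
}

console.log(parseImageRef("node:lts"));                // { name: 'node', tag: 'lts' }
console.log(parseImageRef("custom_image_name:1.0.0")); // { name: 'custom_image_name', tag: '1.0.0' }
console.log(parseImageRef("localhost:5000/app"));      // { name: 'localhost:5000/app', tag: 'latest' }
```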

`WORKDIR` - short for working directory. It defines which directory inside the image we work in, both for the following build instructions and for containers started from the image. Browsing the internet you will see diverse answers about where the `WORKDIR` should be; in the end, it is your preference! I use `/app` most of the time.

NOTE - it is best practice not to move to directories outside the `WORKDIR` in later Dockerfile instructions.

`COPY package*.json ./` - `COPY` copies files from side to side: the left side is the source in the build context (the directory you passed to `docker build`, usually your project folder on the host), and the right side is the destination inside the image. So I tell the build to `COPY` my `package.json` and `package-lock.json` into `./`. If you are a bit familiar with file systems, `.` stands for the current directory (our `WORKDIR`), and the trailing `/` marks it as a directory, which Docker requires when copying multiple files.

`RUN npm install` - `RUN` executes the command just as you would in your console (be aware we are always in the `WORKDIR` we specified). So I run `npm install` to install all dependencies/devDependencies inside the image.

`COPY . .` - after everything installed successfully (hopefully), we copy the entire project directory into our working directory. I copy just `package*.json` and install before copying everything else, so if `npm install` fails, the build breaks before I try to copy the rest. It also lets Docker reuse the cached `npm install` layer when only source files change.

`CMD ["npm","run","start"]` - the command that runs when we start a container from the image. Here I use the `start` script defined in `package.json`. Note: for Docker images of CLI tools you'd better use `ENTRYPOINT` (the Dockerfile reference explains the difference).
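Why does copying `package*.json` first pay off? Docker reuses a cached layer as long as its parent layer, its instruction and the files it copies are unchanged. The TypeScript sketch below is a deliberately simplified model of that idea (`layerKeys`, `pick` and the hashing scheme are illustrative assumptions, not Docker's real cache implementation): each layer key hashes the parent key, the instruction and any copied files, so editing `src/main.ts` invalidates `COPY . .` but not `RUN npm install`.

```typescript
import { createHash } from "node:crypto";

// Simplified model of Docker layer caching (illustrative only).
type Files = Record<string, string>; // path -> file contents

interface Step {
  instruction: string;
  copies?: (files: Files) => Files; // files this step reads from the build context
}

function layerKeys(steps: Step[], context: Files): string[] {
  const keys: string[] = [];
  let parent = "";
  for (const step of steps) {
    const hash = createHash("sha256").update(parent).update(step.instruction);
    const copied = step.copies ? step.copies(context) : {};
    for (const path of Object.keys(copied).sort()) {
      hash.update(path).update(copied[path]);
    }
    parent = hash.digest("hex"); // each key depends on every layer before it
    keys.push(parent);
  }
  return keys;
}

// Hypothetical filter: keep only context files whose path matches `re`.
const pick = (re: RegExp) => (files: Files): Files =>
  Object.fromEntries(Object.entries(files).filter(([p]) => re.test(p)));

// Mirrors the Dockerfile above: manifests first, install, then the rest.
const steps: Step[] = [
  { instruction: "FROM node:lts" },
  { instruction: "WORKDIR /app" },
  { instruction: "COPY package*.json ./", copies: pick(/^package.*\.json$/) },
  { instruction: "RUN npm install" },
  { instruction: "COPY . .", copies: pick(/.*/) },
];

const before = { "package.json": "{...}", "src/main.ts": "v1" };
const after = { ...before, "src/main.ts": "v2" }; // only source code changed

const k1 = layerKeys(steps, before);
const k2 = layerKeys(steps, after);
console.log(k1[3] === k2[3]); // true: the RUN npm install layer is reused
console.log(k1[4] === k2[4]); // false: COPY . . has to run again
```

If `COPY . .` came before `RUN npm install`, any source edit would change the install layer's parent key, and every build would reinstall all dependencies.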

That's it for now with the Dockerfile.

```
# .dockerignore
dist
node_modules
*.log
```

I specify which files/folders I don't want copied into my image.

node_modules - I want `node_modules` to be installed inside the image, so any modules that need Linux binaries (compiled with tools like gcc) are built with the toolchain that ships in the `node:lts` image instead of being copied from my host.

dist - later, when we modify the Dockerfile for build/production purposes, I don't want my host `dist` folder to be copied in and affect my build output in any case.

*.log - I just don't like log files :).
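Here is a simplified TypeScript model of how these three patterns are applied. `isIgnored` and `patternToRegExp` are hypothetical helpers; the sketch assumes the Go `filepath.Match`-style rules Docker documents for `.dockerignore` (`*` never crosses a `/`, `**` spans directories) and skips `!` exceptions. A path is excluded when a pattern matches it or any of its parent directories, which is why `node_modules` hides everything beneath it. One consequence worth knowing: `*.log` only matches log files at the root of the build context, while `**/*.log` also catches nested ones.

```typescript
// Hypothetical, simplified .dockerignore matcher (no "!" exceptions,
// no character classes). Patterns are relative to the build context root.
function patternToRegExp(pattern: string): RegExp {
  let re = "";
  for (let i = 0; i < pattern.length; i++) {
    const c = pattern[i];
    if (pattern.startsWith("**/", i)) {
      re += "(?:.*/)?"; // "**/" matches zero or more directories
      i += 2;
    } else if (pattern.startsWith("**", i)) {
      re += ".*"; // "**" matches anything, including "/"
      i += 1;
    } else if (c === "*") {
      re += "[^/]*"; // "*" never crosses a "/"
    } else if (c === "?") {
      re += "[^/]";
    } else {
      re += c.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex specials
    }
  }
  return new RegExp(`^${re}$`);
}

function isIgnored(path: string, patterns: string[]): boolean {
  const parts = path.split("/");
  // Check every parent directory ("a", "a/b", ...) and finally the path itself.
  for (let n = 1; n <= parts.length; n++) {
    const candidate = parts.slice(0, n).join("/");
    if (patterns.some((p) => patternToRegExp(p).test(candidate))) return true;
  }
  return false;
}

const patterns = ["dist", "node_modules", "*.log"];
console.log(isIgnored("node_modules/express/index.js", patterns)); // true
console.log(isIgnored("npm-debug.log", patterns));                 // true
console.log(isIgnored("logs/app.log", patterns));                  // false: "*" stays at the root
console.log(isIgnored("src/main.ts", patterns));                   // false
```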

Building the image and running it 🐳

Build -

```shell
docker build -t <your image name>:1.0.0 .
```

We run a Docker command that builds an image with the specified tag (`-t`), using the current directory `.` (where our project and the Docker files are) as the build context. This can take a couple of seconds or minutes depending on your project's dependencies and your internet speed.

Running the ready image -

```shell
docker run <your image name>:1.0.0
```

Your console will be attached to the newly created container of the image you built.

You can press `CTRL+C` to get out, but it will shut your container down. To keep the container running, run it detached using the `-d` flag with `docker run`. While it runs detached, you can execute commands in the running container using the `docker exec` command, and to stop the container you can use:

```shell
docker container stop <container name or ID>
```

Docker-Compose: orchestrating Docker infrastructure 🐳

A bit of overkill for a single service, but for two or more it's just fantastic.

```shell
touch docker-compose.yml
```

We create a `docker-compose.yml` file (the default file name the docker-compose CLI looks for).

⚠ `docker-compose.yml` is a bit long to explain, so I documented it in the code itself; you can read more in the Compose file reference.

```yaml
version: "3.8" # the Compose file format version we use
services: # our services
  backend: # our first service
    build: # how to build the image
      dockerfile: ./Dockerfile # by default Compose looks for "Dockerfile" in the context dir, so we can omit this key :)
      context: . # the build context folder
    image: custom_image_name:1.0.0 # when Compose builds, the resulting image gets this name
    environment:
      NODE_ENV: development
      PORT: 3000
    ports:
      - "3000:3000" # <host port>:<container port>
      - "9229:9229" # 9229 is the default Node debug port
    volumes:
      - "/app/node_modules" # keep the node_modules installed in the image in an anonymous volume, so the bind mount below doesn't replace them with the host's node_modules
      - "./:/app" # bind-mount our project directory into the container so every change shows up in the running container; this also lets the debugger map source maps to our local files
```
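The `PORT` variable above only has an effect if the app actually reads it. Depending on your Nest CLI version, the generated `src/main.ts` may hard-code port 3000, so here is a small sketch of resolving the port from the environment. `resolvePort` is a hypothetical helper; in `main.ts` you would pass its result to `app.listen`.

```typescript
// Hypothetical helper: read the listening port from the environment,
// falling back to 3000 when PORT is missing or not a valid port number.
function resolvePort(env: Record<string, string | undefined>, fallback = 3000): number {
  const raw = env.PORT;
  if (raw === undefined || raw.trim() === "") return fallback;
  const port = Number(raw);
  return Number.isInteger(port) && port > 0 && port < 65536 ? port : fallback;
}

// In src/main.ts this would look like:
//   const app = await NestFactory.create(AppModule);
//   await app.listen(resolvePort(process.env));
console.log(resolvePort({ PORT: "3000" })); // 3000
console.log(resolvePort({ PORT: "8080" })); // 8080
console.log(resolvePort({}));               // 3000
console.log(resolvePort({ PORT: "abc" }));  // 3000
```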

So now that the docker-compose file is ready, just run:

```shell
docker-compose up -d
# '-d' - for detached
```

The first time, docker-compose will build the image and bring everything up exactly as specified in `docker-compose.yml`.

Why Compose? Without docker-compose, to bring up our first service as specified we would have to build it and then run it like so:

```shell
docker build -t custom_image_name:1.0.0 .
docker run -d -p 3000:3000 -p 9229:9229 -e NODE_ENV=development -e PORT=3000 -v "$PWD:/app" -v /app/node_modules custom_image_name:1.0.0
```

And that's for one service; imagine yourself bringing up a microservice architecture of 8+ services.... INSANITY 😵.
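To see how the Compose keys map onto that command, here is a TypeScript sketch that turns the `backend` service into the equivalent `docker run` argument list. `ServiceSpec` and `toDockerRunArgs` are hypothetical names, and only the `image`, `environment`, `ports` and `volumes` keys used above are covered.

```typescript
import { posix } from "node:path";

// Hypothetical mini-model of the "backend" service from docker-compose.yml.
interface ServiceSpec {
  image: string;
  environment: Record<string, string | number>;
  ports: string[];   // "<host port>:<container port>"
  volumes: string[]; // "<host path>:<container path>" or an anonymous "<container path>"
}

function toDockerRunArgs(spec: ServiceSpec, projectDir: string): string[] {
  const args = ["run", "-d"];
  for (const port of spec.ports) args.push("-p", port);
  for (const [key, value] of Object.entries(spec.environment)) {
    args.push("-e", `${key}=${value}`);
  }
  for (const volume of spec.volumes) {
    const sep = volume.indexOf(":");
    if (sep === -1) {
      args.push("-v", volume); // anonymous volume: only a container path
      continue;
    }
    // Compose resolves a relative host path against the project directory;
    // docker run needs it absolute. Other sources (named volumes) pass through.
    const source = volume.slice(0, sep);
    const host = source.startsWith(".") ? posix.resolve(projectDir, source) : source;
    args.push("-v", `${host}:${volume.slice(sep + 1)}`);
  }
  args.push(spec.image); // the image name follows all the options
  return args;
}

const backend: ServiceSpec = {
  image: "custom_image_name:1.0.0",
  environment: { NODE_ENV: "development", PORT: 3000 },
  ports: ["3000:3000", "9229:9229"],
  volumes: ["/app/node_modules", "./:/app"],
};

console.log(["docker", ...toDockerRunArgs(backend, "/home/me/project")].join(" "));
// docker run -d -p 3000:3000 -p 9229:9229 -e NODE_ENV=development -e PORT=3000 -v /app/node_modules -v /home/me/project:/app custom_image_name:1.0.0
```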

Debugging 🐛

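In `package.json`, change the `start:debug` script:

```json
"start:debug": "nest start --debug 0.0.0.0:9229 --watch",
```

Binding the debugger to `0.0.0.0` inside the container makes it listen on all network interfaces, so the port Docker publishes can reach it from outside (our host). The Node.js default, `127.0.0.1`, would only accept connections from inside the container.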

In `docker-compose.yml`, we must override the default command so the container starts with the debug script:

```yaml
version: "3.8"
services:
  backend:
    build:
      dockerfile: ./Dockerfile
      context: .
    image: custom_image_name:1.0.0
    environment:
      NODE_ENV: development
      PORT: 3000
    ports:
      - "3000:3000"
      - "9229:9229"
    volumes:
      - "/app/node_modules"
      - "./:/app"
    command: npm run start:debug # overrides the image's CMD
```

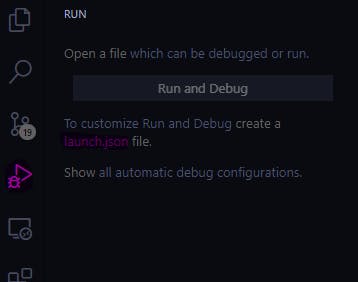

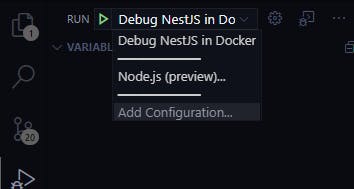

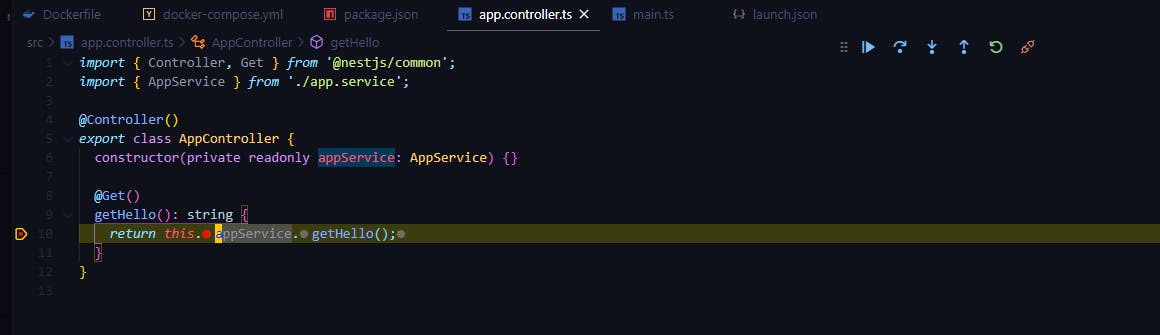

VSCode:

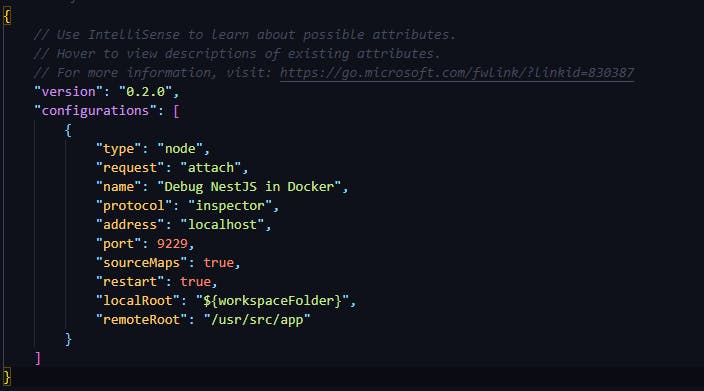

It will create a launch.json file where you specify the debug configurations.

Then adjust it like so:
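A configuration matching the fields described below could look like the following sketch, written as a TypeScript object and serialized to the JSON that goes into `.vscode/launch.json`. The values are assumptions based on this setup (debugger published on `localhost:9229`, project mounted at `/app`); the configuration `name` is arbitrary, and `${workspaceFolder}` is the VS Code variable for the opened folder.

```typescript
// Sketch of an "attach" configuration; values assume the docker-compose
// setup above (port 9229 published to the host, project mounted at /app).
interface AttachConfig {
  type: "node";
  request: "attach";
  name: string;
  protocol: "inspector";
  address: string;
  port: number;
  sourceMaps: boolean;
  restart: boolean;
  localRoot: string;
  remoteRoot: string;
}

const attachToDocker: AttachConfig = {
  type: "node",
  request: "attach",
  name: "Docker: Attach to NestJS", // any label you like
  protocol: "inspector",
  address: "localhost",
  port: 9229,
  sourceMaps: true,
  restart: true,
  localRoot: "${workspaceFolder}", // plain string: VS Code expands this variable, not TypeScript
  remoteRoot: "/app",
};

// The launch.json contents (VS Code's launch.json uses version "0.2.0").
const launchJson = JSON.stringify({ version: "0.2.0", configurations: [attachToDocker] }, null, 2);
console.log(launchJson);
```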

Breaking things up:

type - the type of debugger; in our case it's the Node debugger.

request - `attach` connects to an already running debug instance, while `launch` starts a new debug instance and attaches to it.

name - a name given to the debug configuration.

protocol - the NodeJS debugger protocol to use (the VS Code Node.js debugging docs cover the options).

address - the address where the debugging instance listens. We use `localhost`: Compose attaches the service to its default bridge network, but the `ports` mapping publishes container port 9229 on the host, so the debugger is reachable at `localhost:9229`.

port - the debugging port to attach to (we use the NodeJS default port 9229)

sourceMaps - our project was bootstrapped with NestJS, which uses TypeScript by default, and the generated `tsconfig.json` enables source maps. So when we place breakpoints in our TypeScript, the debugger translates their locations to the compiled JavaScript.

restart - we set it to `true` so the debugger tries to reattach upon disconnection. Most disconnections are caused by hot reloading: watch mode restarts the process whenever the code changes.

localRoot - where the project files are on the host machine.

remoteRoot - where the files are in the remote (the running container), i.e. our `/app` WORKDIR.

If you bootstrap a project of your own (not with the Nest CLI), make sure source maps are enabled in `tsconfig.json` (`"sourceMap": true` under `compilerOptions`) so debugging works.

And you are welcome

If you enjoyed this blog post, subscribe and enjoy awesome JS/TS/development content that I will release on a weekly basis.